What Are the Key Benefits of Agentic Observability?

- Deep Transparency and Insights: These observability tools expose every decision and action an AI agent takes. Because each decision is tracked, developers can explore the data and pinpoint mistakes or inefficiencies.

- Accelerated Debugging and Troubleshooting: Detailed logs and real-time alerts let developers identify and fix problems quickly.

- Performance Optimization: Observability data also guides further optimization of LLM-based applications. For example, LangSmith lets developers see how efficiently LLMs process their data in terms of token usage and response latency, which helps pinpoint bottlenecks in the system.

- Scalability and Adaptability: As deployments of AI agents grow, the observability layer must scale with them. AgentOps is designed specifically for monitoring large multi-agent systems, aggregating information from many sources without degrading performance.
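As a rough illustration of what tracking each decision with token usage and latency can look like, here is a minimal sketch using only the Python standard library. It does not use the LangSmith or AgentOps SDKs; `Span`, `traced`, `count_tokens`, `TRACE`, and `fake_llm_step` are hypothetical names, and the whitespace-based token count is only an approximation of what a real tokenizer would report.

```python
import time
from dataclasses import dataclass
from functools import wraps
from typing import Callable, List, Optional


@dataclass
class Span:
    """One recorded agent step (hypothetical structure, not an SDK type)."""
    name: str
    latency_s: float
    tokens_in: int
    tokens_out: int
    error: Optional[str] = None


TRACE: List[Span] = []  # in-memory sink; a real setup would export these records


def count_tokens(text: str) -> int:
    # Crude whitespace approximation; real tokenizers count differently.
    return len(text.split())


def traced(name: str) -> Callable:
    """Record latency, approximate token counts, and errors for one step."""
    def decorator(fn: Callable[[str], str]) -> Callable[[str], str]:
        @wraps(fn)
        def wrapper(prompt: str) -> str:
            start = time.perf_counter()
            try:
                result = fn(prompt)
            except Exception as exc:
                elapsed = time.perf_counter() - start
                TRACE.append(Span(name, elapsed, count_tokens(prompt), 0, repr(exc)))
                raise
            elapsed = time.perf_counter() - start
            TRACE.append(Span(name, elapsed, count_tokens(prompt), count_tokens(result)))
            return result
        return wrapper
    return decorator


@traced("summarize")
def fake_llm_step(prompt: str) -> str:
    # Stand-in for a model call so the example runs offline.
    return "summary of: " + prompt


fake_llm_step("order 1234 arrived damaged")
for span in TRACE:
    print(span.name, span.tokens_in, span.tokens_out, span.error)
```

Wrapping each step in the same decorator keeps the recorded fields uniform, which is what makes later comparison of slow or token-heavy steps straightforward.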

Where Is Agentic Observability Deployed Across Industries?

- Manufacturing: Predictive maintenance models estimate the likelihood of machine failure in a factory. Tracing how the models reach their decisions shows how downtime predictions are formed, which supports accurate equipment servicing and resource allocation.

- Customer Service and Chatbots: In e-commerce companies, for instance, an AI agent may process thousands of queries each day. If a customer gets a wrong or irrelevant reply, developers can trace exactly where it went wrong, whether in the model logic, a misinterpretation of the query, or poor input data.

- Healthcare: Medical Diagnosis Systems: Accuracy in healthcare is crucial. AgentOps can track AI agents used in medical diagnosis and show which patient data informed each diagnostic suggestion. By observing the decision-making process, medical providers can check that the AI's suggestions are reliable, unbiased, and safe.

- Finance: Fraud Detection: In the finance sector, AI agents are employed to identify potentially fraudulent transactions. By monitoring the agents' decision-making processes, organizations can trace the rationale behind each flagged transaction. This transparency allows financial institutions to refine their fraud detection models and reduce false positives, so that legitimate transactions proceed smoothly while security improves.

Integration With Akira AI

The following steps integrate LangSmith and AgentOps with Akira AI for effective monitoring and workflow management:

APIs: The LangSmith and AgentOps APIs can be integrated into Akira AI's architecture so that data flows smoothly between the observability tools and the workflows that already exist within Akira.

Custom Dashboards: Akira AI builds the dashboards directly into its interface. This gives users a single view in which to check agent performance, watch metrics, and identify problems without switching contexts.

Data Ingestion: Akira AI ingests telemetry and logs from LangSmith for LLM observability and from AgentOps for multi-agent performance monitoring, so collection, processing, and analysis all happen within the Akira ecosystem.

Alerting System: Akira AI integrates the alerting capabilities of LangSmith and AgentOps, so the platform can send real-time notifications about performance anomalies, bottlenecks in decision-making processes, or unexpected agent behavior.

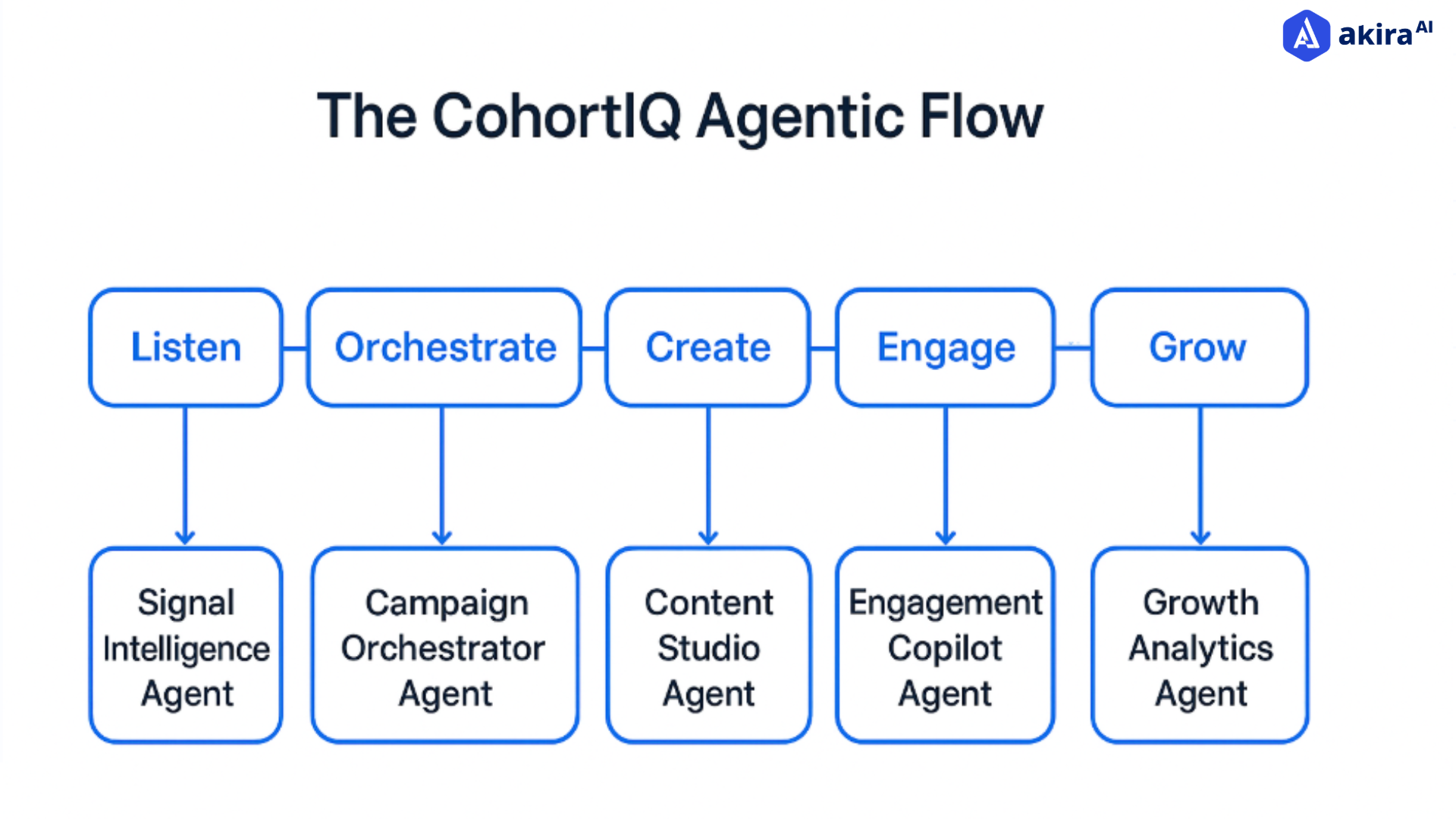

Collaborative Agent Optimization: These integrations let Akira AI generate collaborative analytics for multi-agent systems, optimizing LLM-based workflows so that tasks execute efficiently and agents interact smoothly.
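The ingestion and alerting steps can be pictured with a small, self-contained sketch: records from different sources are normalized onto one schema, and simple rules are evaluated against them. Everything here is an assumption made for illustration, including the `Event` fields, the raw record keys, the `normalize` and `evaluate` helpers, and the 2000 ms threshold. None of it reflects the actual LangSmith, AgentOps, or Akira AI interfaces.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass(frozen=True)
class Event:
    source: str        # label only, e.g. "langsmith" or "agentops"; no API implied
    agent: str
    latency_ms: float
    ok: bool


@dataclass(frozen=True)
class AlertRule:
    name: str
    predicate: Callable[[Event], bool]


def normalize(source: str, raw: dict) -> Event:
    """Map a raw record onto the shared schema (field names are hypothetical)."""
    return Event(
        source=source,
        agent=str(raw.get("agent", "unknown")),
        latency_ms=float(raw.get("latency_ms", 0.0)),
        ok=bool(raw.get("ok", True)),
    )


def evaluate(events: Iterable[Event], rules: List[AlertRule]) -> List[str]:
    """Return one alert message for every (event, rule) pair that matches."""
    alerts = []
    for event in events:
        for rule in rules:
            if rule.predicate(event):
                alerts.append(f"{rule.name}: {event.agent} ({event.source})")
    return alerts


RULES = [
    AlertRule("slow_response", lambda e: e.latency_ms > 2000),  # assumed threshold
    AlertRule("agent_failure", lambda e: not e.ok),
]

events = [
    normalize("langsmith", {"agent": "planner", "latency_ms": 350, "ok": True}),
    normalize("agentops", {"agent": "retriever", "latency_ms": 4200, "ok": True}),
    normalize("agentops", {"agent": "executor", "latency_ms": 120, "ok": False}),
]
alerts = evaluate(events, RULES)
for message in alerts:
    print(message)
```

Normalizing first means the alert rules only need to be written once, regardless of which tool produced the record.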

What Challenges Exist in Implementing Agentic Observability?

Although LangSmith and AgentOps are valuable observability tools, they come with their own challenges.

- Performance Overhead: Continuous logging and monitoring of agentic systems incurs performance overhead, especially in resource-constrained environments. Data collection can add latency, particularly in high-traffic applications or multi-agent systems with many interactions.

- Data Volume Management: As AI agents grow more complex, the volume of telemetry data grows with them. Without proper data management or filtering, the sheer amount of information can overwhelm both the system and its developers. Large-scale agent-based systems can produce more telemetry than can be processed or turned into meaningful insights.

- Integration Complexity: LangSmith and AgentOps expose APIs for integration, but the integration work itself is technically demanding. Highly specialized or legacy systems in particular may require a significant overhaul of existing infrastructure.

- Interpretability Issues: Even when observability is robust, it may not be easy to understand why a particular model or agent behaves in a specific way. For LLMs especially, biased or unusual outputs may only be fully understood with additional domain expertise.
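One common way to limit both overhead and data volume is to sample traces rather than keep all of them. The sketch below shows one possible policy, assumed here for illustration and not taken from either tool: keep every trace that contains an error, and keep a deterministic fraction of the rest by hashing the trace ID. The function name `keep_trace`, the ID format, and the 10% rate are all hypothetical.

```python
import hashlib


def keep_trace(trace_id: str, is_error: bool, sample_rate: float) -> bool:
    """Keep every error trace; keep a deterministic fraction of the others."""
    if is_error:
        return True
    digest = hashlib.sha256(trace_id.encode("utf-8")).digest()
    # Map the first 8 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return bucket < sample_rate


# Synthetic workload: 10,000 traces, one in fifty marked as an error.
traces = [(f"trace-{i}", i % 50 == 0) for i in range(10_000)]
kept = [t for t in traces if keep_trace(t[0], t[1], sample_rate=0.1)]
print(f"kept {len(kept)} of {len(traces)} traces")
```

Hashing the ID instead of drawing a random number means the same trace gets the same decision in every process that sees it, so the spans of one trace are kept or dropped together.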

What is the biggest limitation of observability tools?

Managing large volumes of telemetry data efficiently.

What Are the Future Trends in Agentic Observability?

In the near future, AI observability is likely to be oriented toward self-optimizing agents that learn from observations and optimize themselves in real time. This would reduce the need for human intervention in debugging and optimization, and lead to more autonomous systems.

- Predictive Observability: This is another trend gaining momentum. Instead of only monitoring agents in real time for expected events, future systems will predict failures or suboptimal behavior ahead of time, allowing pre-emptive adjustments.

- Advanced Multi-Agent Coordination: Observability tools will need to handle far more complicated multi-agent systems containing tens of cooperating agents. Tracing will have to improve enough that developers can follow interactions through very complex, decentralized systems.

- Edge AI Monitoring: As more AI moves out to the edge, observability tools will have to adapt to decentralized edge systems. This brings a new set of challenges and opportunities in keeping observability as effective in distributed environments as it is in centralized ones.
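To make the idea of predicting a problem ahead of time concrete, here is a deliberately simple sketch: fit a straight line to recent latency readings and estimate how many more steps remain until it crosses a limit. This is a toy stand-in for predictive observability under stated assumptions, not a description of how any existing tool works. The function `steps_until_breach`, the sample readings, and the 600 ms limit are hypothetical.

```python
from typing import Optional, Sequence


def steps_until_breach(values: Sequence[float], limit: float) -> Optional[float]:
    """Fit a least-squares line and estimate steps until it crosses `limit`.

    Returns None if there are fewer than two points or the trend is not rising.
    Returns 0.0 if the fitted line is already at or above the limit.
    """
    n = len(values)
    if n < 2:
        return None
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    current = intercept + slope * (n - 1)  # fitted value at the latest step
    return max(0.0, (limit - current) / slope)


latencies_ms = [400, 420, 440, 460, 480, 500]
eta = steps_until_breach(latencies_ms, limit=600)
print(f"estimated steps until the limit is reached: {eta}")
```

A linear fit is only reasonable over short windows with a steady trend; noisy or bursty metrics would need a more robust model.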

Conclusion: Why Is Agentic Observability with LangSmith and AgentOps Critical?

As AI agents become more central to critical operations across industries, having robust observability systems like LangSmith and AgentOps will be essential. These platforms not only offer transparency into the inner workings of these systems but also provide powerful tools for debugging, optimizing, and scaling AI operations. From predicting machine failures in factories to managing trading bots in finance, observability transforms the way we manage AI, making systems more reliable, efficient, and understandable.

By adopting observability, businesses can realize the full capacity of their AI systems, so that agents act and learn in line with strategic objectives. The future of observability promises even more automation, predictive power, and adaptability, laying the groundwork for the next generation of intelligent, autonomous systems.