Executive Summary

SAIF Aviator is a next-generation autonomous visual-AI platform that empowers organizations to deploy multimodal vision agents, edge-native decision workflows, and continuous self-learning pipelines.

For instance, one of our industrial automation clients—a leading smart infrastructure operator with multi-site drone and sensor deployments across Asia—leveraged SAIF Aviator to unify visual inspection, safety monitoring, and maintenance workflows. The deployment resulted in a 65% reduction in manual workloads, 40% faster deployment cycles, and significant improvements in real-time decision accuracy.

With its unified agent framework, edge-first autonomy, and model lifecycle management, SAIF Aviator helps enterprises accelerate deployment, reduce operational burden, and enhance situational awareness across complex environments such as manufacturing, logistics, and smart infrastructure.

Customer Challenge

Customer Information

- Solution: Autonomous Visual-AI Platform for Multimodal Vision Agents & Edge-Native Decisioning
- Industry: Smart Infrastructure & Industrial Automation
- Location: Asia-Pacific (APAC) Region – Multi-country operations
- Scale: Large Enterprise

Business Challenges

The customer, a large infrastructure and industrial automation enterprise, faced increasing pressure to automate visual intelligence workflows across geographically distributed sites. Despite investing in traditional computer vision systems, their teams struggled with scalability, operational efficiency, and decision latency.

Key business challenges included:

- High operational dependency on manual monitoring, leading to slow incident response and inconsistent insights across facilities.
- Fragmented visual AI deployments, with no unified platform to scale models and manage agents across multiple locations.
- Extended deployment cycles due to complex integration with existing systems and workflows.
- A need for low-latency, edge-native decisioning to support operations in remote and bandwidth-constrained environments.
- Compliance and data sovereignty constraints that limit the flexibility to use public cloud services for mission-critical workloads.

By addressing these business pain points, SAIF Aviator positioned itself as a strategic enabler for autonomous, compliant, and scalable visual intelligence across the customer’s multi-country operations.

Technical Challenges

- Legacy computer vision systems, built as one-off solutions, struggled to scale across devices and regions.
- The absence of a unified agent framework led to siloed models, inefficient retraining, and operational overhead.
- Edge-based inference (drones, sensors, remote devices) suffered from latency, connectivity, and reliability issues.
- The model lifecycle lacked automation: ingestion → model updates → monitoring → retraining.
- Multimodal (image, video, live feed) pipelines were weakly integrated and difficult to deploy to embedded/edge environments.

Partner Solution

Solution Overview

SAIF Aviator delivers a composable architecture combining a unified agent framework, multimodal vision AI engines, and edge-first autonomous execution. It supports the full lifecycle of visual agents, from data ingestion and model deployment through feedback loops and continuous learning, across cloud, edge, and embedded devices.

Capabilities & Features

- Multimodal Vision AI Engines: Support image, video, and live-feed inference across complex real-world environments.
- Unified Agent Framework: Allows developers, AI scientists, and ops teams to create, deploy, and refine autonomous visual agents in a single platform.
- Edge-First Autonomy: Native deployment on drones, embedded sensors, and edge devices with minimal latency, enabling autonomous actions in situ.
- Intelligent Lifecycle Management: Automated pipelines for model versioning, monitoring, retraining, and feedback loops keep agents evolving and performant.
- Additional differentiators: on-device inference and storage; federated learning; configurable agent workflows; cross-cloud hybrid compute support.

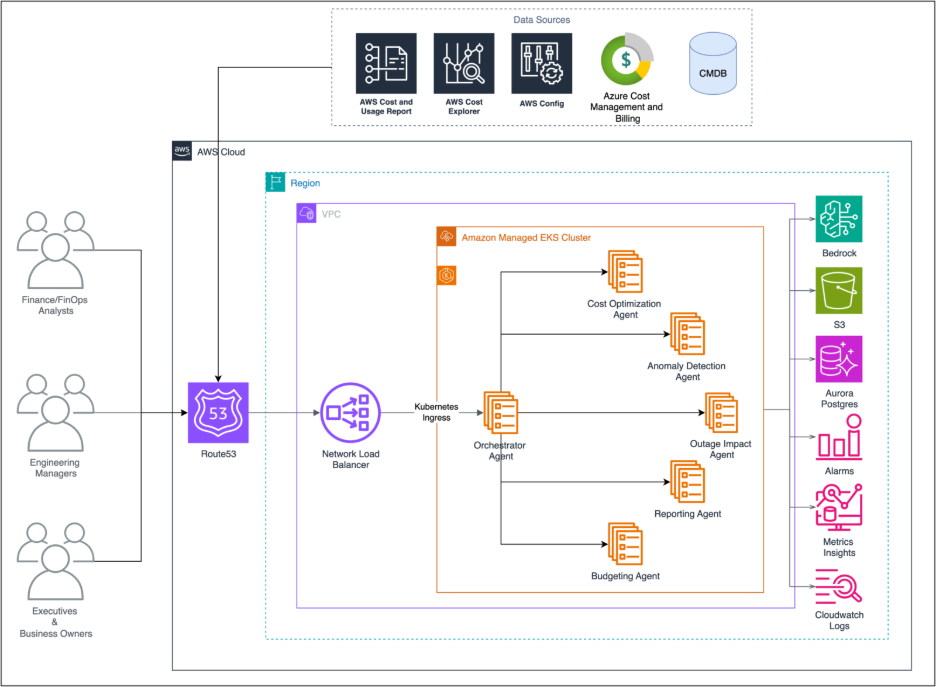

Architecture Diagram

Implementation Details

- Deployment Methodology: Utilised agile sprints, starting with a pilot on edge devices (e.g., drone sensors), followed by an industrial roll-out.
- Integration: Plug-and-play pretrained models for key industries (manufacturing, logistics, infrastructure) shortened development time.
- Edge deployment: Deployed SAIF Aviator agents onto embedded sensors and drones for real-time, on-device inference.
- Lifecycle automation: Set up continuous monitoring and feedback loops for live model retraining and adaptation.
- Hybrid compute: The platform enabled seamless switching between cloud, private cloud, and edge compute to meet data-sovereignty and compliance requirements.
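
The hybrid compute item above can be pictured as a small placement policy. The following is a minimal sketch under stated assumptions, not SAIF Aviator's actual API: `Workload`, `ComputeTarget`, and `choose_target` are hypothetical names, and the routing rules are illustrative guesses at how sovereignty and latency constraints might be combined.

```python
from dataclasses import dataclass
from enum import Enum


class ComputeTarget(Enum):
    EDGE = "edge"
    PRIVATE_CLOUD = "private_cloud"
    PUBLIC_CLOUD = "public_cloud"


@dataclass(frozen=True)
class Workload:
    """Hypothetical description of a job to be placed."""
    name: str
    data_must_stay_in_region: bool  # data-sovereignty constraint (assumed flag)
    needs_realtime: bool            # latency-sensitive, e.g. on-drone inference
    link_available: bool            # whether the site currently has connectivity


def choose_target(w: Workload) -> ComputeTarget:
    """Pick a compute target for a workload (illustrative rules only)."""
    # Real-time work, or any work at a disconnected site, runs on the device.
    if w.needs_realtime or not w.link_available:
        return ComputeTarget.EDGE
    # Sovereignty-restricted data stays off the public cloud.
    if w.data_must_stay_in_region:
        return ComputeTarget.PRIVATE_CLOUD
    return ComputeTarget.PUBLIC_CLOUD
```

For example, `choose_target(Workload("retraining", True, False, True))` would select the private cloud under these assumed rules, while a real-time drone inspection would stay on the edge device.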

Timeline

| Phase | Description | Duration |
| --- | --- | --- |
| Phase 1 – Design & Planning | Requirement analysis, architecture design, and pilot scoping for Edge AI deployment (covering drones, embedded sensors, and on-prem systems). | 2 weeks |
| Phase 2 – Development & Integration | Deployment of SAIF Aviator core components (Unified Agent Framework, Multimodal Vision AI Engines). Integration with AWS EKS, S3, and SageMaker pipelines. | 3 weeks |
| Phase 3 – Pilot Deployment | Edge-first deployment on selected drones and embedded devices, validation of inference accuracy, and optimization of latency. | 4 weeks |
| Phase 4 – Full Rollout | Multi-site rollout, automation of lifecycle workflows, and enablement of continuous learning/retraining loops. | 6 weeks |
| Phase 5 – Optimization & Continuous Improvement | Establishing monitoring dashboards (QuickSight), retraining pipelines, and feedback loops for ongoing model evolution. | Continuous |

Innovation & Best Practices

- Leveraged a modular agent-based architecture to scale visual AI across devices and domains rather than one-off solutions.
- Embraced edge-first deployment to minimise latency and empower autonomous decisioning where connectivity is limited.
- Embedded continuous learning pipelines enabling agents to improve based on live feedback rather than static retraining flows.
- Adopted hybrid compute strategy enabling enterprise-grade compliance and scalability.
- Applied DevOps and model-ops best practices: CI/CD for models, unified observability across agents, version control for agents and models.

Results & Benefits

Business Outcomes

- Manual workloads were reduced by up to 65% through autonomous visual agent operation.
- Deployment cycles were cut by over 40%, thanks to pretrained industry models and plug-and-play architecture.
- Decision accuracy improved significantly (e.g., “97% of organizations reported dramatic improvement” from SAIF Aviator usage).
- Field productivity and response efficiency improved for 82% of users.

Technical Benefits

-

Scalable agent framework: one platform to manage agents across edge and cloud.

-

Reliable low-latency inference on edge devices, enabling real-time autonomous actions.

-

Automated lifecycle management reduced technical debt and manual model maintenance.

-

Cross-cloud and hybrid deployment flexibility guaranteeing compliance and data control.

Customer Testimonial

“With SAIF Aviator we transformed our vision-AI operations — deployment went from months to weeks, and our field agents are making decisions autonomously in real time.”

Lessons Learned

Challenges Overcome

- Navigating edge deployment constraints (connectivity, device heterogeneity) required robust on-device caching and hybrid fallback strategies.
- Integrating legacy systems and visual pipelines meant building flexible adapters and ensuring model compatibility.
- Ensuring continuous learning without data leakage or drift required federated learning and feedback monitoring.
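
As an illustration of the on-device caching mentioned above, the sketch below shows one common pattern, store-and-forward buffering: the decision is always made locally, and results wait in a bounded buffer until the uplink returns. This is an assumption about how such a strategy could look, not a description of SAIF Aviator internals; `EdgeAgent`, `local_model`, and `upload` are hypothetical names.

```python
from collections import deque
from typing import Callable, Deque, Dict

Frame = bytes
Result = Dict[str, object]


class EdgeAgent:
    """Runs inference on-device and buffers results while the uplink is down."""

    def __init__(self, local_model: Callable[[Frame], Result],
                 upload: Callable[[Result], None], max_buffered: int = 1000):
        self.local_model = local_model
        self.upload = upload
        # Bounded buffer: when full, the oldest results are dropped first.
        self.pending: Deque[Result] = deque(maxlen=max_buffered)

    def process(self, frame: Frame) -> Result:
        result = self.local_model(frame)  # the decision is made on-device
        self.pending.append(result)
        self.flush()                      # opportunistic sync; safe if offline
        return result

    def flush(self) -> int:
        """Upload buffered results in order; stop at the first failure."""
        sent = 0
        while self.pending:
            try:
                self.upload(self.pending[0])
            except ConnectionError:
                break  # uplink is down; keep results cached for the next attempt
            self.pending.popleft()
            sent += 1
        return sent
```

A result is removed from the buffer only after its upload succeeds, so a dropped connection delays synchronisation without losing results that are still buffered. The bounded `deque` trades completeness for a fixed memory footprint on constrained devices.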

Best Practices Identified

- Start with a pilot on edge devices to validate latency, reliability and integration before large-scale roll-out
- Use modular agent frameworks to future-proof deployments across domains rather than fixed solutions
- Automate the full lifecycle (ingestion → inference → monitoring → retraining) to keep agents performant
- Architect hybrid compute from day one to meet enterprise-grade compliance and scalability requirements
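
The monitoring → retraining step of the lifecycle above can be sketched as a rolling-accuracy check over field feedback. This is a minimal illustration under assumptions: `RetrainMonitor` is a hypothetical helper, and the window size and accuracy threshold are placeholder values, not figures from the deployment.

```python
from collections import deque
from typing import Deque


class RetrainMonitor:
    """Tracks rolling accuracy from feedback and flags when to retrain."""

    def __init__(self, window: int = 100, min_accuracy: float = 0.9):
        # Keep only the most recent `window` outcomes (True = correct prediction).
        self.outcomes: Deque[bool] = deque(maxlen=window)
        self.min_accuracy = min_accuracy

    def record(self, predicted: str, actual: str) -> None:
        self.outcomes.append(predicted == actual)

    def accuracy(self) -> float:
        # With no feedback yet, report 1.0 so an empty window never triggers retraining.
        if not self.outcomes:
            return 1.0
        return sum(self.outcomes) / len(self.outcomes)

    def should_retrain(self) -> bool:
        # Decide only once the window is full, to avoid reacting to a few samples.
        return (len(self.outcomes) == self.outcomes.maxlen
                and self.accuracy() < self.min_accuracy)
```

In a pipeline, each verified inspection outcome would be passed to `record`, and a scheduled job would call `should_retrain` to decide whether to start a retraining run.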

Future Plans

Looking ahead, the roadmap includes:

- Integrating more advanced sensor modalities (LiDAR, thermal imaging) into SAIF Aviator agents
- Expanding into predictive maintenance and anomaly detection for industrial fleets
- Building a digital twin layer for simulation and training of visual agents before live deployment
- Rolling out global edge-clusters, enabling truly distributed autonomous vision-AI at scale

Next Steps

Talk to our experts about transforming real-time visual intelligence and autonomous decisioning with SAIF Aviator. Discover how industries and departments leverage agentic workflows and decision intelligence to become truly decision-centric, using compound AI systems that automate and optimize IT operations, enhance responsiveness, and drive intelligent efficiency across the enterprise.
