Executive Summary

Enterprises operating at scale face the challenge of managing high customer interaction volumes across multiple channels — voice, chat, and digital touchpoints. To address these complexities, we developed the Customer Service Manager AI Agent, an AI-powered, AWS-native automation platform that transforms customer service operations into an intelligent, predictive, and scalable ecosystem.

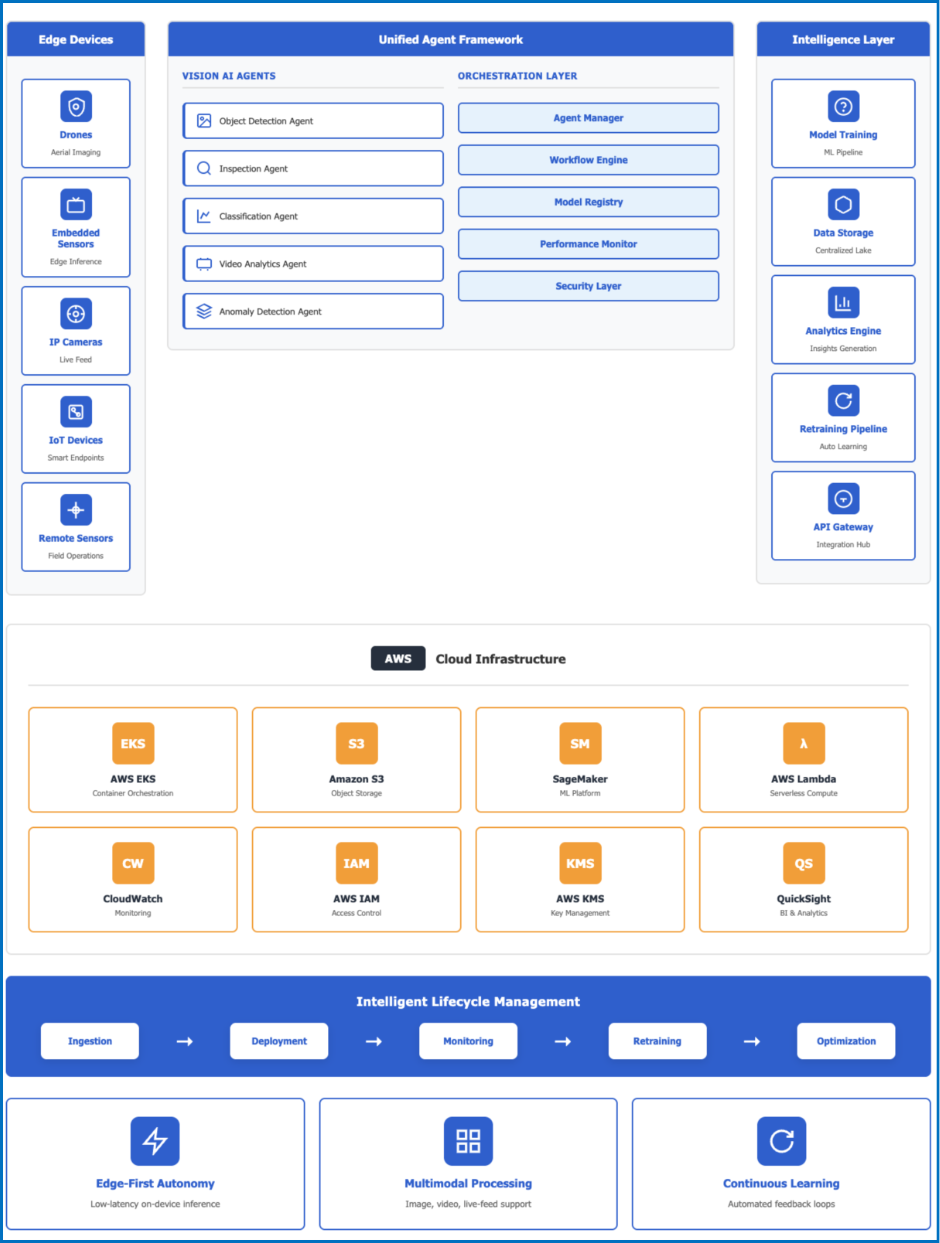

Deployed on Amazon EKS and powered by Amazon Bedrock, the solution leverages a multi-agent orchestration model that automates customer segmentation, behavior analysis, sentiment tracking, lifetime value prediction, feedback insights, and real-time agent assistance. Integrated with the enterprise's communication channels, it captures engagement data in real time while ensuring compliance, observability, and human-in-the-loop collaboration. The result is a scalable, secure, and data-driven customer service platform that delivers consistent, intelligent engagement powered entirely by AWS.

Customer Challenge

Customer Information

Business Challenges

Enterprises managing thousands of customer interactions daily across email and chat channels faced growing operational strain and inconsistent service outcomes. Traditional CRM and contact center systems lacked built-in intelligence and relied on manual triage, manual routing, and repetitive human validation.

Key challenges included:

- Compliance pressure to maintain data privacy and auditability across regions.
- Lack of predictive analytics for churn risk, LTV, or dissatisfaction.

The enterprise sought a unified, AI-first solution that could automate customer service workflows, improve operational transparency, and provide predictive insights — while being secure, resilient, and AWS-native.

Technical Challenges

Building an AI-driven customer experience intelligence platform like the Customer Service Manager AI Agent introduced several complex engineering challenges, particularly in enabling real-time behavioral analysis, sentiment reasoning, customer segmentation, and churn prediction across thousands of concurrent interactions flowing through Slack, Teams, email, web chat, and CRM systems.

The first challenge was achieving low-latency sentiment, behavioral, and LTV signal extraction at scale. Each incoming message—whether from Slack, Teams, or email—needed to be analyzed in real time for tone, emotion, engagement patterns, and dissatisfaction indicators. To achieve this, the solution was deployed on Amazon EKS, where each micro-agent (such as Sentiment Analysis, Behavioral Insights, or LTV Prediction) operates as an independent containerized service with Horizontal Pod Autoscaling (HPA). Amazon Bedrock provided access to foundation models for language understanding, reasoning, and emotion detection. Together, EKS and Bedrock enabled the elasticity and parallelism necessary to sustain sub-second behavioral interpretation and risk scoring during peak activity windows.
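The case study does not publish its autoscaling settings, but Kubernetes documents the rule HPA applies: desired replicas = ceil(current replicas × current metric / target metric). No change is made while that ratio stays within a tolerance (0.1 by default), and the result is clamped to the configured minimum and maximum. The Python sketch below approximates this rule for a single metric. The function name, the parameter defaults, and the idea of scaling a Sentiment Analysis agent on per-pod message load are assumptions for illustration. The sketch also omits scale-down stabilization windows and pod readiness handling.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Approximate the Kubernetes HPA scaling rule for one metric (hypothetical helper).

    desired = ceil(current_replicas * current_metric / target_metric),
    left unchanged when the ratio is within `tolerance` of 1.0,
    then clamped to [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to target: HPA keeps the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, take a hypothetical Sentiment Analysis deployment running 4 pods that average 180 in-flight messages each against a target of 100. It would scale to ceil(4 × 1.8) = 8 pods.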

Second, maintaining context continuity and data traceability across multiple communication channels posed a significant engineering challenge. The system needed to correlate Slack threads, Teams conversations, email chains, survey responses, and profile data while retaining a unified historical view of the customer’s journey. A multi-layer context engine was implemented using Amazon DynamoDB, Amazon Neptune, and Amazon S3 (with versioning enabled). This architecture ensures that every interaction, on any channel, remains traceable, reproducible, and auditable. It also enables longitudinal analysis for churn prediction, segment movement, and behavior trend detection.
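The write-up does not describe the context engine's schema. The Python below is a minimal sketch of the idea, assuming a per-customer, append-only record of interactions. It merges messages from any channel into one chronological timeline. Plain in-memory structures stand in for DynamoDB, Neptune, and versioned S3, and the `Interaction` and `ContextStore` names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Interaction:
    customer_id: str
    channel: str          # e.g. "slack", "teams", "email", "survey"
    timestamp: datetime
    text: str


class ContextStore:
    """Hypothetical in-memory stand-in for the per-customer context layer."""

    def __init__(self) -> None:
        # Append-only list per customer: records are never overwritten,
        # mirroring how a versioned store keeps every historical write.
        self._events: dict[str, list[Interaction]] = defaultdict(list)

    def record(self, event: Interaction) -> None:
        self._events[event.customer_id].append(event)

    def timeline(self, customer_id: str) -> list[Interaction]:
        """All interactions for one customer across channels, oldest first."""
        return sorted(self._events.get(customer_id, []), key=lambda e: e.timestamp)

    def channels_used(self, customer_id: str) -> set[str]:
        return {e.channel for e in self._events.get(customer_id, [])}
```

Because writes only append, a timeline can be rebuilt for any point in the customer's history. That reproducibility is what longitudinal churn and segment analysis depends on.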

Another key challenge was designing a declarative, modular multi-agent orchestration pattern. Each agent—such as Segmentation, LTV Analysis, Escalation Risk Detection, Feedback Intelligence, or Agent Assist—needed to function independently while remaining loosely coupled and synchronized. A central Orchestrator Agent, deployed on Amazon EKS, handled asynchronous communication, lifecycle management, and shared context propagation across all functional agents. This ensured high throughput, isolation of failures, and non-blocking workflows while maintaining coherent, end-to-end reasoning across customer interactions.
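One way to get the fan-out, failure isolation, and non-blocking behavior described above is `asyncio.gather` with `return_exceptions=True`. The sketch below is a hypothetical, single-process illustration, not the Orchestrator Agent itself. The agent functions are placeholders; a real agent would call a Bedrock model or a service on EKS. Each agent receives its own shallow copy of the shared context.

```python
import asyncio
from typing import Awaitable, Callable

# An agent receives a copy of the shared context and returns its partial result.
Agent = Callable[[dict], Awaitable[dict]]


async def orchestrate(message: str, agents: dict[str, Agent]) -> dict:
    """Fan a message out to independent agents concurrently and merge results.

    A failure in one agent is recorded under "errors" instead of aborting
    the other agents.
    """
    context = {"message": message}
    names = list(agents)
    results = await asyncio.gather(
        *(agents[name](dict(context)) for name in names),  # shallow copy per agent
        return_exceptions=True,  # collect exceptions instead of raising them
    )
    merged: dict = {"message": message, "errors": {}}
    for name, result in zip(names, results):
        if isinstance(result, BaseException):
            merged["errors"][name] = repr(result)
        else:
            merged[name] = result
    return merged


async def sentiment_agent(ctx: dict) -> dict:
    # Placeholder keyword heuristic; a real agent would call a model instead.
    text = ctx["message"].lower()
    negative = any(word in text for word in ("refund", "angry", "cancel"))
    return {"label": "negative" if negative else "neutral"}


async def ltv_agent(ctx: dict) -> dict:
    # Simulates a failing downstream model to show failure isolation.
    raise RuntimeError("LTV model unavailable")


if __name__ == "__main__":
    print(asyncio.run(orchestrate(
        "I want to cancel my plan",
        {"sentiment": sentiment_agent, "ltv": ltv_agent},
    )))
```

In this run the sentiment result is still returned even though the LTV agent raises, which is the isolation property the orchestration pattern aims for.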

Ensuring data security, privacy, and explainability was another critical concern. Because the system processes sensitive customer information—including chat transcripts, behavioral data, sentiment profiles, and feedback records—it required strict end-to-end encryption, granular IAM access controls, and AWS KMS-managed key protection. Compliance with organizational and regional data governance policies required additional safeguards such as secure API gateways, request signing, and auditable access patterns.
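The case study does not say how auditable access patterns were implemented. On AWS, API-level activity is typically recorded by services such as AWS CloudTrail. The hypothetical sketch below illustrates the underlying idea of a tamper-evident trail. Each audit entry is chained to the previous one with a SHA-256 hash, so modifying any earlier record causes verification to fail. It covers tamper evidence only, not encryption or access control.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Hypothetical tamper-evident audit trail built as a SHA-256 hash chain.

    Each entry stores the hash of the previous entry, so editing any
    earlier record breaks verification from that point onward.
    """

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(record: dict) -> str:
        # Canonical JSON (sorted keys) so the same record always hashes the same.
        payload = json.dumps(record, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def append(self, principal: str, action: str, resource: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = {
            "principal": principal,
            "action": action,
            "resource": resource,
            "time": datetime.now(timezone.utc).isoformat(),
            "prev_hash": prev_hash,
        }
        entry = {**record, "hash": self._digest(record)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        prev_hash = self.GENESIS
        for entry in self.entries:
            record = {k: v for k, v in entry.items() if k != "hash"}
            if record["prev_hash"] != prev_hash or self._digest(record) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```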

The platform also required deep observability and continuous performance tuning to operate reliably at scale. Traditional monitoring techniques were insufficient for distributed, multi-agent processing pipelines. Amazon CloudWatch, AWS X-Ray, and Amazon SNS were integrated to provide full-stack visibility into inference latency, sentiment processing throughput, error propagation, and pod-level anomalies. This observability layer enabled proactive detection of slow LLM responses, communication bottlenecks, or state desynchronization events—reducing operational overhead and improving system resilience.
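A containerized agent can make its inference latency visible in CloudWatch by emitting metrics in CloudWatch Embedded Metric Format (EMF). EMF is a structured JSON log line whose `_aws` metadata tells CloudWatch Logs which fields to extract as metrics. The sketch below assumes the container's log output reaches CloudWatch Logs through an EMF-aware path, for example the CloudWatch agent. The `CSMAgent` namespace, the `InferenceLatency` metric, and the `Agent` dimension are hypothetical names.

```python
import json
import time


def emf_latency_record(agent: str, latency_ms: float, namespace: str = "CSMAgent") -> str:
    """Build one CloudWatch Embedded Metric Format (EMF) log line.

    The "_aws" block declares InferenceLatency as a metric with an "Agent"
    dimension; the metric and dimension values sit at the top level.
    """
    record = {
        "_aws": {
            "Timestamp": int(time.time() * 1000),  # epoch milliseconds
            "CloudWatchMetrics": [{
                "Namespace": namespace,
                "Dimensions": [["Agent"]],
                "Metrics": [{"Name": "InferenceLatency", "Unit": "Milliseconds"}],
            }],
        },
        "Agent": agent,
        "InferenceLatency": latency_ms,
    }
    return json.dumps(record)


if __name__ == "__main__":
    start = time.perf_counter()
    # ... model invocation would happen here ...
    elapsed_ms = (time.perf_counter() - start) * 1000
    print(emf_latency_record("sentiment-analysis", elapsed_ms))
```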

Together, these challenges required the creation of a cloud-native, modular, and interpretable multi-agent system capable of analyzing, reasoning, and scaling autonomously—forming the foundation that enables the Customer Service Manager AI Agent to deliver trusted, high-performance, and data-driven customer experience intelligence on AWS.

Partner Solution

Solution Overview

XenonStack implemented the Customer Service Manager AI Agent as a set of containerized agents on AWS, leveraging the components and services summarized below.

Key Components

AWS Services Used

| Service | Purpose |
| --- | --- |
| Amazon EKS | Hosts containerized agents for orchestration and classification. |
| Amazon Bedrock | Provides LLM reasoning, summarization, and ticket classification intelligence. |
| Amazon Connect | Enables customer voice and chat interactions through AWS’s contact center platform. |
| AWS Lambda | Acts as an event router between Connect, Bedrock, and EKS for seamless orchestration. |
| Amazon DynamoDB | Stores ticket states, customer profiles, and metadata for workflow continuity. |
| Amazon Neptune | Maintains contextual relationships across tickets, agents, and customers. |
| Amazon ElastiCache (Redis) | Provides real-time, low-latency caching for session-level memory. |
| Amazon S3 | Stores logs, transcripts, and historical data for analytics and auditability. |
| Amazon QuickSight | Delivers CSAT, SLA, and MTTR performance dashboards. |
| Amazon CloudWatch & AWS X-Ray | Provides observability, tracing, and health monitoring of all agents. |
| AWS IAM, KMS, Secrets Manager | Secures data, credentials, and encryption keys across services. |
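Lambda is described here as an event router between Connect, Bedrock, and EKS. The handler below is a minimal sketch of the routing decision alone. Amazon Connect's Lambda integration delivers contact data under `event["Details"]`, with values supplied by the contact flow in `Details.Parameters`, and it expects a flat map of simple values back. The `utterance` parameter, the keyword rules, and the agent service names are assumptions. The actual call to Bedrock or to the EKS-hosted agent is omitted.

```python
# Hypothetical routing table: keyword in the caller's utterance -> agent service.
ROUTES = {
    "refund": "escalation-risk-agent",
    "cancel": "escalation-risk-agent",
    "feedback": "feedback-intelligence-agent",
    "survey": "feedback-intelligence-agent",
}
DEFAULT_ROUTE = "agent-assist"


def lambda_handler(event, context):
    """Choose a downstream agent for an Amazon Connect contact (sketch only).

    Reads the contact ID from Details.ContactData and an assumed "utterance"
    value from Details.Parameters, then returns a flat dict of strings.
    """
    details = event.get("Details", {})
    contact_id = details.get("ContactData", {}).get("ContactId", "unknown")
    utterance = details.get("Parameters", {}).get("utterance", "").lower()

    # First matching keyword wins; otherwise fall back to general agent assist.
    target = next((agent for key, agent in ROUTES.items() if key in utterance),
                  DEFAULT_ROUTE)
    return {"contactId": contact_id, "targetAgent": target}
```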

Architecture Diagram

Implementation Details

The implementation followed an Agile, sprint-based delivery model over a 10-week period, with iterative feature development, integration testing, and continuous stakeholder feedback. The engagement began with structured discovery sessions involving customer service stakeholders, ML engineers, and DevOps architects to define key customer service automation workflows, governance requirements, and scalability objectives.

Phase 1 – Design & Architecture:

Defined the agent orchestration model and AWS architecture configuration for EKS clusters, IAM roles, and network security controls.

Phase 2 – Core Agent Deployment:

Deployed sentiment, behavior, segmentation, LTV, feedback, and risk-detection agents.

Phase 3 – Integration & Data Flow:

Integrated Slack, Teams, CRM, and email workflows via webhooks and event triggers.
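Incoming Slack webhook and event requests should be authenticated before they reach any agent. Slack documents a signing scheme for this. The receiver computes HMAC-SHA256 over `v0:{timestamp}:{raw body}` with the app's signing secret and compares the result with the `X-Slack-Signature` header. It also rejects any request whose `X-Slack-Request-Timestamp` is more than five minutes old. The sketch below implements that check with the standard library. The function name is hypothetical, and the Teams and email integrations use different verification mechanisms that are not shown.

```python
import hashlib
import hmac
import time
from typing import Optional


def verify_slack_request(signing_secret: str, timestamp: str, raw_body: str,
                         signature: str, now: Optional[float] = None,
                         max_age_s: int = 300) -> bool:
    """Validate Slack's X-Slack-Signature / X-Slack-Request-Timestamp pair."""
    current = time.time() if now is None else now
    try:
        ts = int(timestamp)
    except ValueError:
        return False
    if abs(current - ts) > max_age_s:
        return False  # stale or future-dated: treat as a possible replay
    basestring = f"v0:{timestamp}:{raw_body}".encode("utf-8")
    expected = "v0=" + hmac.new(signing_secret.encode("utf-8"), basestring,
                                hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, signature)
```

The body must be the raw request payload exactly as received. Re-serializing parsed JSON can change its bytes and make valid signatures fail.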

Phase 4 – Analytics & Dashboards:

Configured Amazon QuickSight dashboards for real-time SLA, CSAT, and response-time insights.
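The case study does not define how the dashboard KPIs are calculated. The hypothetical aggregation below follows common conventions that could feed such dashboards. CSAT is the share of 1-to-5 survey responses scoring 4 or 5. MTTR is the mean time from opening to resolution. SLA attainment is the share of tickets resolved within a target duration. The `Ticket` type and field names are assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Ticket:
    opened: datetime
    resolved: datetime
    csat_score: Optional[int] = None  # 1-5 survey score, if the customer answered


def service_kpis(tickets: list[Ticket], sla: timedelta) -> dict:
    """Compute CSAT %, MTTR (hours), and SLA attainment % for resolved tickets."""
    if not tickets:
        return {"csat_pct": None, "mttr_hours": None, "sla_pct": None}
    durations = [t.resolved - t.opened for t in tickets]
    scores = [t.csat_score for t in tickets if t.csat_score is not None]
    # CSAT: share of survey responses scoring 4 or 5 (a common convention).
    csat = 100 * sum(s >= 4 for s in scores) / len(scores) if scores else None
    mttr = sum(durations, timedelta()) / len(durations)
    within_sla = sum(d <= sla for d in durations)
    return {
        "csat_pct": csat,
        "mttr_hours": mttr.total_seconds() / 3600,
        "sla_pct": 100 * within_sla / len(tickets),
    }
```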

Phase 5 – Security & Observability:

Implemented IAM-based access control, KMS encryption, and CloudWatch metrics. Deployed CloudWatch alarms and SNS notifications for performance anomalies.
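The alarms and SNS notifications in this phase can be expressed as parameters for CloudWatch's `PutMetricAlarm` API. The sketch below only builds the keyword arguments for boto3's `put_metric_alarm`, so it runs without AWS credentials. The metric namespace and name, the 1,000 ms p99 threshold, and the three-period evaluation window are hypothetical values, not figures from the engagement.

```python
def latency_alarm_params(agent: str, sns_topic_arn: str,
                         threshold_ms: float = 1000.0,
                         namespace: str = "CSMAgent") -> dict:
    """Keyword arguments for boto3 CloudWatch put_metric_alarm (hypothetical values).

    Alarms when one agent's p99 InferenceLatency exceeds the threshold for
    three consecutive 1-minute periods, then notifies the given SNS topic.
    """
    return {
        "AlarmName": f"{agent}-p99-inference-latency",
        "Namespace": namespace,
        "MetricName": "InferenceLatency",
        "Dimensions": [{"Name": "Agent", "Value": agent}],
        "ExtendedStatistic": "p99",  # percentile statistics use ExtendedStatistic
        "Period": 60,
        "EvaluationPeriods": 3,
        "Threshold": threshold_ms,
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",
        "AlarmActions": [sns_topic_arn],
    }


# Usage (requires boto3 and AWS credentials):
#   import boto3
#   boto3.client("cloudwatch").put_metric_alarm(
#       **latency_alarm_params("sentiment-analysis", "arn:aws:sns:..."))
```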

Phase 6 – Testing & Rollout:

Performed functional, load, and chaos testing to verify consistent performance under peak traffic.
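The case study does not name its load-testing tooling. The sketch below is a minimal, hypothetical harness. It fires requests at an async handler with bounded concurrency and reports latency percentiles. `fake_agent` is a stand-in that simulates a slow response on every tenth request. In practice, the handler would call a deployed agent endpoint.

```python
import asyncio
import statistics
import time
from typing import Awaitable, Callable


async def load_test(handler: Callable[[int], Awaitable[None]],
                    total_requests: int, concurrency: int) -> dict:
    """Send total_requests calls to handler with at most `concurrency` in flight
    and report latency percentiles in milliseconds."""
    semaphore = asyncio.Semaphore(concurrency)
    latencies: list[float] = []

    async def one_call(i: int) -> None:
        async with semaphore:
            # Timing starts after acquiring a slot, so queueing is excluded.
            start = time.perf_counter()
            await handler(i)
            latencies.append((time.perf_counter() - start) * 1000)

    await asyncio.gather(*(one_call(i) for i in range(total_requests)))
    cuts = statistics.quantiles(latencies, n=100)  # 99 cut points: p1..p99
    return {"count": len(latencies), "p50_ms": cuts[49], "p95_ms": cuts[94],
            "max_ms": max(latencies)}


async def fake_agent(i: int) -> None:
    # Stand-in for a call to an agent endpoint: every 10th request is slow.
    await asyncio.sleep(0.05 if i % 10 == 0 else 0.01)


if __name__ == "__main__":
    print(asyncio.run(load_test(fake_agent, total_requests=50, concurrency=10)))
```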

Innovation and Best Practices

- AWS Well-Architected Framework: Applied across the operational excellence, security, reliability, performance efficiency, and cost optimization pillars.