AI systems fail when they lack context. A chatbot misunderstands your question. A code generator produces irrelevant solutions. A recommendation engine suggests products you'd never buy. The problem? Poor Context Engineering.

Context Engineering is the practice of designing and managing contextual information to maximize AI system performance. As a foundational component of Agentic Analytics, Context Engineering goes beyond simple prompt engineering—which focuses on single queries—to build comprehensive frameworks that help AI systems understand intent, maintain coherence across conversations, and deliver consistently accurate results.

In this guide, you'll learn how Context Engineering works, why it outperforms traditional approaches, and how to implement it using proven techniques that top AI teams use to improve accuracy by 30-40%.

What is Context Engineering?

Context Engineering is the systematic design and management of contextual information to improve AI system performance, accuracy, and relevance.

Key Benefits

When to Use Context Engineering

Use Context Engineering when building chatbots, AI assistants, code generators, recommendation systems, or any AI application requiring nuanced understanding of context beyond single prompts.

Prompt Engineering vs Context Engineering

Prompt engineering emerged as the first method for communicating with Large Language Models (LLMs)—focusing on crafting precise input queries to generate desired outputs. This approach worked well for simple, single-turn interactions.

However, as AI systems advanced to handle complex conversations, process multiple data types simultaneously, and maintain context across sessions, prompt engineering hit its limits. It couldn't effectively manage the dynamic, multi-faceted nature of modern AI applications.

Context Engineering was developed to address these limitations, introducing:

Context Engineering in Action: Real-World Examples

Example 1: Customer Support Chatbot

Without Context Engineering:

User: "I need help with my order"

Bot: "Which order number?"

User: "The one from yesterday"

Bot: "I don't have access to that information."

With Context Engineering:

User: "I need help with my order"

Bot: "I see you placed order #12345 yesterday for $89.99. Would you like to track the shipment, modify items, or request a return?"

Result: 60% reduction in conversation length, 45% improvement in customer satisfaction.

Example 2: AI Code Assistant

Without Context Engineering:

Developer: "Add error handling"

AI: [generates a generic try-catch block]

With Context Engineering (includes project context):

Developer: "Add error handling"

AI: [generates error handling specific to the project's logging framework, follows the team's error categorization standards, and includes appropriate retry logic based on the service being called]

Result: 70% reduction in code revisions, 3x faster development.

Example 3: Financial Advisory AI

Without Context Engineering:

User: "Should I invest in tech stocks?"

AI: "Tech stocks can be volatile but offer growth potential..."

With Context Engineering (knows user profile, risk tolerance, goals):

User: "Should I invest in tech stocks?"

AI: "Given your moderate risk tolerance, 15-year timeline, and current 35% tech allocation, I recommend maintaining your position. Adding more would exceed your target sector diversification of 40%. Consider reviewing in Q3 after your bonus when rebalancing."

Result: 85% user trust score, 40% higher engagement.

Key Principles and Methodologies

| Principle | Description |
|---|---|
| Clarity | Structure context to eliminate ambiguity. |
| Relevance | Prioritize information critical to the task. |
| Adaptability | Adjust context dynamically based on user needs or task evolution. |
| Scalability | Ensure context pipelines handle growing complexity. |
| Persistence | Maintain memory across sessions for coherent multi-turn interactions. |

Why Context Engineering Matters in AI/ML

Context engineering is critical for improving:

It matters across application types, including chatbots, code generation, RAG-based question answering, and multimodal applications.

Real-world Impact and Applications

Context Engineering enables AI systems to deliver customized, accurate information in settings ranging from customer service chatbots to medical diagnosis. In domains such as healthcare, education, and finance, it supports fluid, tailored user experiences that improve efficiency and enable innovation.

Case Studies and Results:

Context Engineering powers tailored AI solutions across industries:

Fundamentals of Context

Understanding Context in AI Systems

When we refer to context in AI, we mean the data or information (textual, visual, or otherwise) available to a model that enables it to produce a response. This context can come from the user's current query, prior queries, or external data that helps the AI understand intent and produce responses relevant to that intent.

Types of Contexts

| Type | Description | Example |
|---|---|---|
| Semantic | Focuses on meaning, disambiguating terms based on context. | Understanding "bank" as a financial institution vs. a riverbank. |
| Syntactic | Deals with sentence structure and grammar for coherent parsing. | Correctly interpreting complex sentence structures. |
| Pragmatic | Addresses meaning based on intent, user goals, or cultural norms. | Adapting responses to user preferences or situational context. |

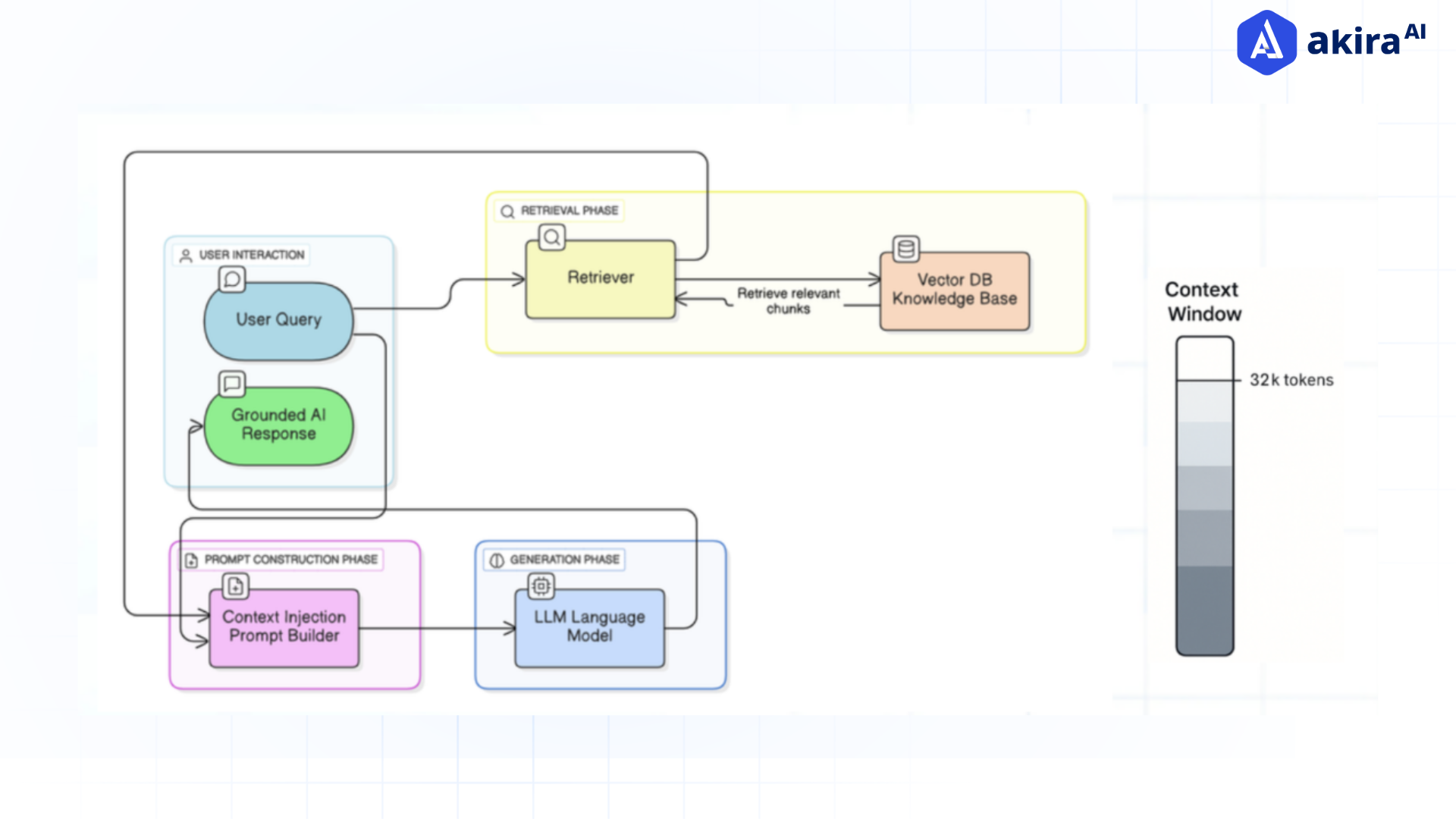

Context Windows and Limitations

All large language models operate within a context window, a fixed maximum input size measured in tokens. Beyond that limit, information gets truncated, and model performance degrades.

Efficient context design ensures:


Fig. 1 Context Window Management: structuring and compressing inputs to fit within LLM token limits
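As a rough illustration of what happens at the limit, the sketch below checks whether text fits a token budget and, if it does not, keeps only the most recent tokens. It assumes a whitespace word count as a stand-in for a real tokenizer (actual counts depend on the model's own tokenizer), and `count_tokens` and `fit_to_window` are hypothetical helper names.

```python
def count_tokens(text: str) -> int:
    """Approximate the token count by splitting on whitespace (a stand-in for a real tokenizer)."""
    return len(text.split())


def fit_to_window(text: str, max_tokens: int) -> str:
    """Return text unchanged if it fits the budget; otherwise keep only the last max_tokens words."""
    if max_tokens <= 0:
        return ""
    words = text.split()
    if len(words) <= max_tokens:
        return text
    # Drop the oldest (leftmost) words so the most recent content survives.
    return " ".join(words[-max_tokens:])


if __name__ == "__main__":
    prompt = "one two three four five six"
    print(count_tokens(prompt))
    print(fit_to_window(prompt, 4))
```

Blind truncation like this discards whatever happens to come first, which is why prioritization and compression matter.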

Context Persistence and Memory

Persistent context makes it possible for an AI system to store information across interactions. For example, it can retain user preferences or inputs shared earlier and recall them in the next interaction. Memory is a complex mechanism, often implemented as session storage, that lets information persist across multi-turn dialogues.
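A minimal sketch of session-scoped memory, assuming an in-process dictionary stands in for real session storage (typically a database or cache in production). `SessionMemory` and its methods are hypothetical names used for illustration.

```python
from collections import defaultdict


class SessionMemory:
    """Stores facts per session; an in-process stand-in for real session storage."""

    def __init__(self) -> None:
        # Maps session_id -> {key: value}; defaultdict creates a session on first write.
        self._store: dict[str, dict[str, str]] = defaultdict(dict)

    def remember(self, session_id: str, key: str, value: str) -> None:
        """Store (or overwrite) one fact for this session."""
        self._store[session_id][key] = value

    def recall(self, session_id: str) -> dict[str, str]:
        """Return a copy of everything remembered for this session (empty if unknown)."""
        return dict(self._store.get(session_id, {}))

    def as_context(self, session_id: str) -> str:
        """Render remembered facts as 'key: value' lines to prepend to the next prompt."""
        facts = self.recall(session_id)
        return "\n".join(f"{key}: {value}" for key, value in sorted(facts.items()))


if __name__ == "__main__":
    memory = SessionMemory()
    memory.remember("user-42", "preferred_language", "Python")
    memory.remember("user-42", "timezone", "UTC")
    # In a later turn, the stored facts are still available to inject.
    print(memory.as_context("user-42"))
```

Using `.get()` in `recall` avoids silently creating empty sessions when an unknown ID is looked up.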

The Role of Context in Human-AI Interaction

Context shapes how an AI interprets human input and how it responds. When context is engineered correctly, interactions feel natural and conversational, and the system's understanding aligns more closely with human intent.

Core Context Engineering Techniques

Context Structuring and Organization

The first principle is to organize context into logical categories so it is easier to manage and respond to. Break input into segments:
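One way to keep segments distinct is to give each a label and a clear header when assembling the prompt. The sketch below assumes four illustrative segment names (`instructions`, `user_profile`, `history`, `query`); `ContextSegment` and `build_prompt` are hypothetical, and the `###` header style is an arbitrary choice.

```python
from dataclasses import dataclass


@dataclass
class ContextSegment:
    """One labeled block of context, such as instructions or the user's query."""
    label: str
    content: str


def build_prompt(segments: list[ContextSegment]) -> str:
    """Join non-empty segments under explicit headers so the model can tell them apart."""
    parts = []
    for segment in segments:
        text = segment.content.strip()
        if text:  # skip empty segments rather than emitting bare headers
            parts.append(f"### {segment.label.upper()}\n{text}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(build_prompt([
        ContextSegment("instructions", "Answer as a customer support agent."),
        ContextSegment("user_profile", "Plan: Pro. Region: EU."),
        ContextSegment("history", ""),  # empty, so it is omitted
        ContextSegment("query", "Where is my order?"),
    ]))
```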

Information Hierarchy and Prioritization

The second principle is to prioritize information by relevance and place the most important information first, so the model focuses on it within the context window. Use a hierarchy:
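A hierarchy can be sketched as a priority score per item: sort highest first, then greedily keep items that still fit the budget. This assumes integer priorities assigned upstream and a word count as the token estimate; `prioritize` is a hypothetical helper.

```python
def prioritize(items: list[tuple[int, str]], max_tokens: int) -> list[str]:
    """Place higher-priority items first and keep those that fit within max_tokens.

    items holds (priority, text) pairs; a larger number means more important.
    Token cost is approximated by word count.
    """
    selected: list[str] = []
    used = 0
    # sorted() is stable even with reverse=True, so ties keep their input order.
    for _, text in sorted(items, key=lambda item: item[0], reverse=True):
        cost = len(text.split())
        if used + cost <= max_tokens:  # skip items that would overflow, but keep trying smaller ones
            selected.append(text)
            used += cost
    return selected


if __name__ == "__main__":
    context_items = [
        (1, "User mentioned liking blue earlier"),
        (3, "Current question: cancel order 12345"),
        (2, "Order 12345 status: shipped"),
    ]
    print(prioritize(context_items, max_tokens=10))
```

Greedy selection is simple and predictable; it does not guarantee the best possible packing, but it never lets a low-priority item displace a higher-priority one.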

Context Compression and Optimization

The third principle is to reduce verbosity. To fit context into token limits:

- Extract keywords
- Remove redundancy
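Both compression steps can be sketched with the standard library alone. This is a toy version under stated assumptions: sentences are split on terminal punctuation, duplicates are detected by exact case-insensitive match, and `STOPWORDS` is a small illustrative set rather than a standard list. The function names are hypothetical.

```python
import re

# A small illustrative stopword set, not a standard list.
STOPWORDS = {"a", "an", "the", "is", "are", "was", "of", "to", "and", "in", "for", "on", "with", "my"}


def remove_redundancy(text: str) -> str:
    """Drop sentences that repeat an earlier one, ignoring case."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    seen: set[str] = set()
    kept: list[str] = []
    for sentence in sentences:
        key = sentence.strip().lower()
        if key and key not in seen:
            seen.add(key)
            kept.append(sentence.strip())
    return " ".join(kept)


def extract_keywords(text: str) -> list[str]:
    """Return the distinct non-stopword words in the order they first appear."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    # dict.fromkeys de-duplicates while preserving first-seen order.
    return list(dict.fromkeys(w for w in words if w not in STOPWORDS))


if __name__ == "__main__":
    raw = "My order is late. my order is late. The order was shipped on Monday."
    print(remove_redundancy(raw))
    print(extract_keywords(raw))
```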

Multi-turn Context Management

The fourth principle is to track and update context over multiple turns:
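A common way to track context across turns is a sliding window that keeps the newest turns that fit a budget. The sketch below assumes a word-count token estimate and a hypothetical `Conversation` class; real systems often summarize older turns rather than simply dropping them.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Tracks dialogue turns and exposes the newest ones that fit a token budget."""
    max_tokens: int
    turns: list[tuple[str, str]] = field(default_factory=list)  # (role, text), oldest first

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))

    def window(self) -> list[tuple[str, str]]:
        """Walk back from the newest turn, keeping turns until the budget would be exceeded."""
        kept: list[tuple[str, str]] = []
        used = 0
        for role, text in reversed(self.turns):
            cost = len(text.split())  # word count as a rough token estimate
            if used + cost > self.max_tokens:
                break  # stop here so the window stays a contiguous run of recent turns
            kept.append((role, text))
            used += cost
        kept.reverse()  # restore chronological order
        return kept


if __name__ == "__main__":
    convo = Conversation(max_tokens=8)
    convo.add("user", "I need help with my order")
    convo.add("bot", "Which order number?")
    convo.add("user", "The one from yesterday")
    print(convo.window())
```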

Context Injection Strategies

The fifth principle is to add relevant external information (e.g., from documents or APIs) to the context for more complete and accurate responses, especially in knowledge-intensive tasks. Inject structured data like:
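Injection can be as simple as serializing fetched records and placing them, labeled by source, ahead of the user's message. The `orders_api` source name, the record fields, and `inject_context` itself are hypothetical; the order values echo the support chatbot example earlier.

```python
import json


def inject_context(query: str, records: dict[str, dict]) -> str:
    """Place labeled, JSON-serialized records ahead of the user's query.

    records maps a source name (e.g. an internal API) to the data fetched from it.
    """
    blocks = [
        f"[{source}]\n{json.dumps(data, indent=2, sort_keys=True)}"
        for source, data in records.items()
    ]
    blocks.append(f"[user_query]\n{query}")
    return "\n\n".join(blocks)


if __name__ == "__main__":
    # Hypothetical record, as if fetched from an orders service.
    order = {"order_id": "12345", "placed": "yesterday", "total_usd": 89.99}
    print(inject_context("I need help with my order", {"orders_api": order}))
```

Serializing with `sort_keys=True` keeps the injected text stable across calls, which makes prompts easier to compare and cache.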

Dynamic Context Adaptation

The final principle is to adjust the context in real time based on:
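As one illustration, the sketch below adapts context per turn by detecting the user's intent and loading only the sources mapped to it. The intents, keyword rules, source names, and functions are all hypothetical, and simple substring matching stands in for what would usually be a trained classifier.

```python
# Hypothetical mapping from a detected intent to the context sources worth loading.
CONTEXT_BY_INTENT = {
    "order_status": ["recent_orders", "shipping_events"],
    "billing": ["invoices", "payment_methods"],
    "general": ["faq"],
}

# Illustrative keyword rules, checked in insertion order; the first match wins.
INTENT_KEYWORDS = {
    "order_status": ("order", "shipment", "tracking", "delivery"),
    "billing": ("invoice", "charge", "refund", "payment"),
}


def detect_intent(message: str) -> str:
    """Return the first intent whose keywords appear in the message, else 'general'."""
    text = message.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(word in text for word in keywords):
            return intent
    return "general"


def select_context(message: str) -> list[str]:
    """Pick which context sources to load for this turn based on the detected intent."""
    return list(CONTEXT_BY_INTENT[detect_intent(message)])


if __name__ == "__main__":
    for msg in ["Where is my shipment?", "I was charged twice", "Hello"]:
        print(msg, "->", select_context(msg))
```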

Advanced Methods and Tools

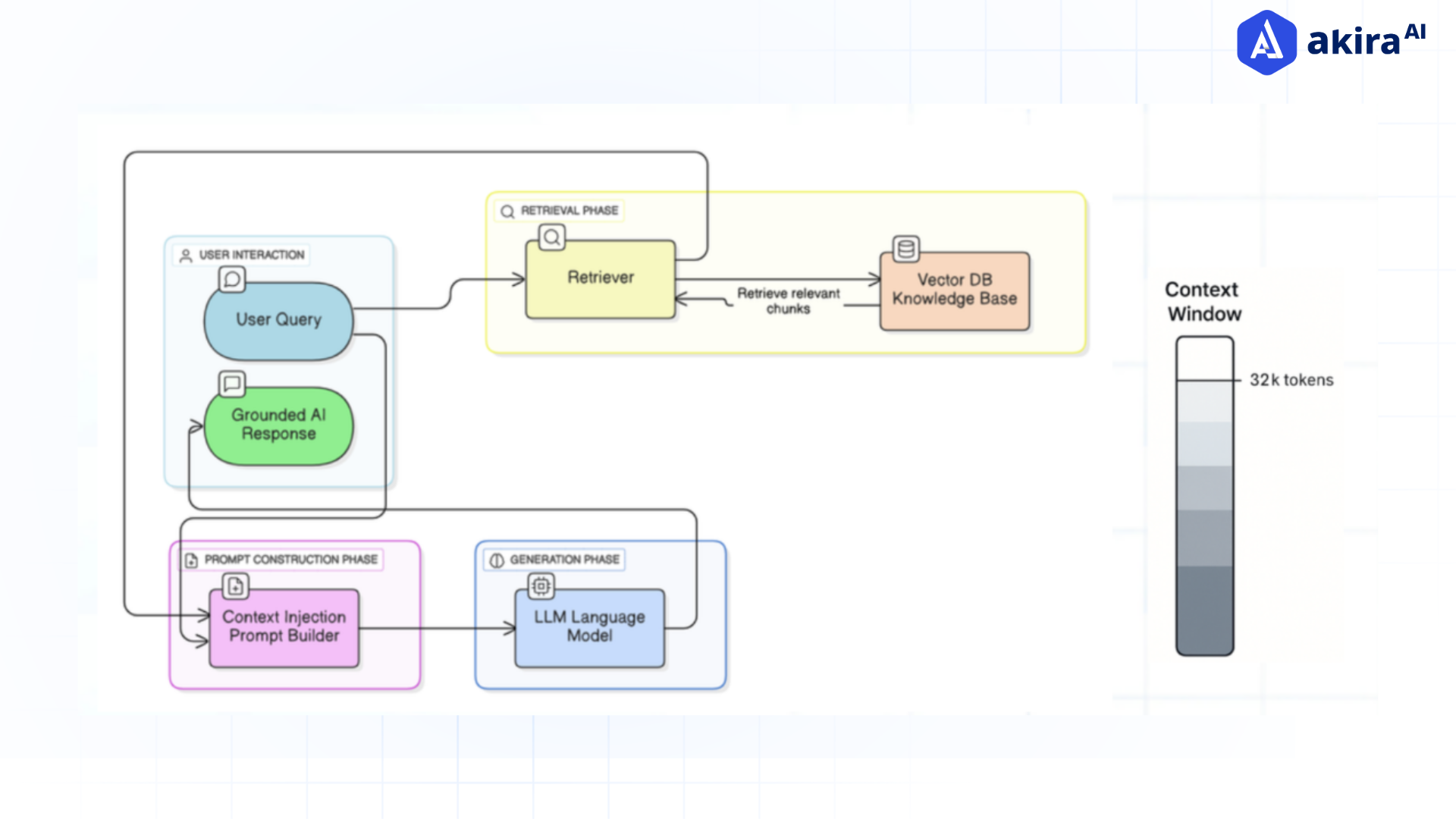

Fig. 2 RAG Workflow
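The retrieve-then-generate loop behind retrieval-augmented generation (RAG) can be sketched without external dependencies if bag-of-words cosine similarity stands in for an embedding model and vector store. That substitution is an assumption made for a self-contained example; `retrieve` and `build_rag_prompt` are hypothetical names.

```python
import math
import re
from collections import Counter


def _vectorize(text: str) -> Counter:
    """Bag-of-words term counts; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = _vectorize(query)
    ranked = sorted(documents, key=lambda d: _cosine(q, _vectorize(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Retrieve supporting passages and place them ahead of the question."""
    passages = retrieve(query, documents, k)
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "Returns are accepted within 30 days.",
        "Shipping takes 3 to 5 business days.",
        "Our office is in Berlin.",
    ]
    print(build_rag_prompt("how many days does shipping take", docs, k=1))
```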

- Context-aware Fine-tuning: Fine-tune models on domain-specific context for better performance in specialized applications, such as legal or medical AI.
- Cross-modal Context Integration: Combine text, images, and other data types to create richer context for multimodal AI systems, allowing them to understand an issue holistically.
- Context Engineering Platforms and Tools: Frameworks such as LangChain and LlamaIndex provide modular components for structuring, retrieving, and optimizing context.
- Open-source Libraries and Frameworks: Libraries such as Hugging Face Transformers and LangChain, along with their documentation, support context handling from basic text processing and tokenization to full RAG implementations.
- Custom Framework Development: Build custom context management systems for particular use cases that retrieve data from internal APIs, databases, and other proprietary sources.
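A custom framework often reduces to a registry of context providers, each wrapping an internal API or database query. The sketch below assumes providers are plain functions of a user ID; `ContextManager`, `register`, and `gather` are hypothetical names, not the API of LangChain, LlamaIndex, or any other library.

```python
from typing import Callable

# A provider takes a user ID and returns text for the context. Real providers would
# call internal APIs or query databases; the ones in the demo below are stubs.
ContextProvider = Callable[[str], str]


class ContextManager:
    """Registry that gathers context from every registered provider."""

    def __init__(self) -> None:
        self._providers: dict[str, ContextProvider] = {}

    def register(self, name: str, provider: ContextProvider) -> None:
        self._providers[name] = provider

    def gather(self, user_id: str) -> dict[str, str]:
        """Call each provider; a failing source becomes a note instead of failing the request."""
        results: dict[str, str] = {}
        for name, provider in self._providers.items():
            try:
                results[name] = provider(user_id)
            except Exception as exc:  # deliberately broad: any provider error is recorded, not raised
                results[name] = f"<unavailable: {exc}>"
        return results


def failing_crm(user_id: str) -> str:
    """Stub that simulates an unreachable backend."""
    raise ConnectionError("CRM timeout")


if __name__ == "__main__":
    manager = ContextManager()
    manager.register("profile", lambda uid: f"user {uid} prefers email contact")
    manager.register("crm", failing_crm)
    print(manager.gather("42"))
```

Isolating each provider behind `try`/`except` means one slow or broken source degrades the context rather than failing the whole response.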

Context Engineering for Different AI Models

| Model Type | Context Use Case Example |
|---|---|
| LLMs (e.g., GPT) | Text generation, summarization, translation |
| Vision-Language Models | Image captioning, OCR, visual Q&A |
| Code Generators | Project-specific code completion, dependency injection |
| Chatbots | Persistent memory for user preferences |
| Multimodal Systems | Combining text/image/audio for holistic output |

Industry Applications and Use Cases

Best Practices and Performance Optimization

Fig. 3 Best Practices and Performance Optimization

Context Design Principles

Performance Optimization Strategies

Error Handling and Fallback Mechanisms

Testing and Validation Methods

Documentation and Maintenance

Common Pitfalls and How to Avoid Them

| Pitfall | Solution |
|---|---|
| Context overload | Remove redundant or irrelevant data |
| Token overflow | Compress or prioritize key parts |
| Contextual bias | Audit and diversify training context |
| Stale or drifting context | Refresh and validate with real-time data |
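The refresh half of the stale-context fix can be sketched as a time-to-live (TTL) check: reuse cached context while it is young, and re-fetch it once it ages out. `CachedContext` and `get_fresh` are hypothetical, and the `refresh` callable stands in for whatever live data source a system uses.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class CachedContext:
    value: str
    fetched_at: float  # timestamp in seconds, as returned by time.time()


def get_fresh(
    entry: Optional[CachedContext],
    ttl_seconds: float,
    refresh: Callable[[], str],
    now: Optional[float] = None,
) -> CachedContext:
    """Return the cached entry if it is younger than ttl_seconds; otherwise re-fetch it."""
    current = time.time() if now is None else now
    if entry is not None and current - entry.fetched_at < ttl_seconds:
        return entry
    # Missing or stale: pull fresh data (e.g. from a live API) and re-stamp it.
    return CachedContext(value=refresh(), fetched_at=current)


if __name__ == "__main__":
    cached = CachedContext("price=100", fetched_at=time.time() - 120)
    fresh = get_fresh(cached, ttl_seconds=60, refresh=lambda: "price=105")
    print(fresh.value)
```

The optional `now` parameter exists so the staleness logic can be tested deterministically without waiting on the clock.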

Challenges, Limitations, and Solutions in Context Engineering

Context Length Constraints

Computational Resource Requirements

Privacy and Security Concerns

Bias and Fairness Issues

Scalability Challenges

Troubleshooting Guide
